This month’s blog was written by Mikael Linden, Senior Application Specialist at CSC - IT Center for Science, and co-Work Package Lead of CINECA WP2 - Interoperable Authentication and Authorisation Infrastructure. This blog is the third in our GA4GH standards series, presenting an overview of how GA4GH standards are being developed and implemented by CINECA. For the first blog in the series, which gives a broad overview of how CINECA is facilitating federated data discovery, access and analysis, please see Dylan Spalding’s blog "Implementation of GA4GH standards in CINECA".

This month’s blog was written by Lauren Fromont, a member of the EGA team at CRG and of CINECA WP1 - Federated Data Discovery and Querying. This blog is the second in our GA4GH standards series, presenting an overview of how GA4GH standards are being developed and implemented by CINECA.

This month’s blog was written by Dylan Spalding (EMBL-EBI), Coordinator of the European Genome-phenome Archive and co-Work Package Lead of CINECA WP4 - Federated Joint Cohort Analysis. This blog is the first in our new series, presenting an overview of GA4GH standards being developed and implemented by CINECA.

The final blog in our series on text-mining is a guest blog written by Shyama Saha, who specialises in machine learning and text mining at EMBL-EBI. The CINECA project aims to create a text-mining tool suite to support extraction of metadata concepts from unstructured textual cohort data and description files. To create a standardised metadata representation, CINECA is applying natural language processing (NLP) techniques such as entity recognition, with rule-based tools such as MetaMap, LexMapr, and Zooma. In this blog, Shyama discusses the challenges of dictionary and rule-based text-mining tools, especially for entity recognition tasks, and how deep learning methods address these issues.

This post is part of a series on a text-mining pipeline being developed by CINECA in Work Package 3. The first instalment, "Uncovering metadata from semi-structured cohort data", explained the Zooma and Curami pipelines. The second, "LexMapr - A rule-based text-mining tool for ontology term mapping and classification", introduced LexMapr. This third instalment explains the normalisation pipeline developed at SIB/HES-SO.

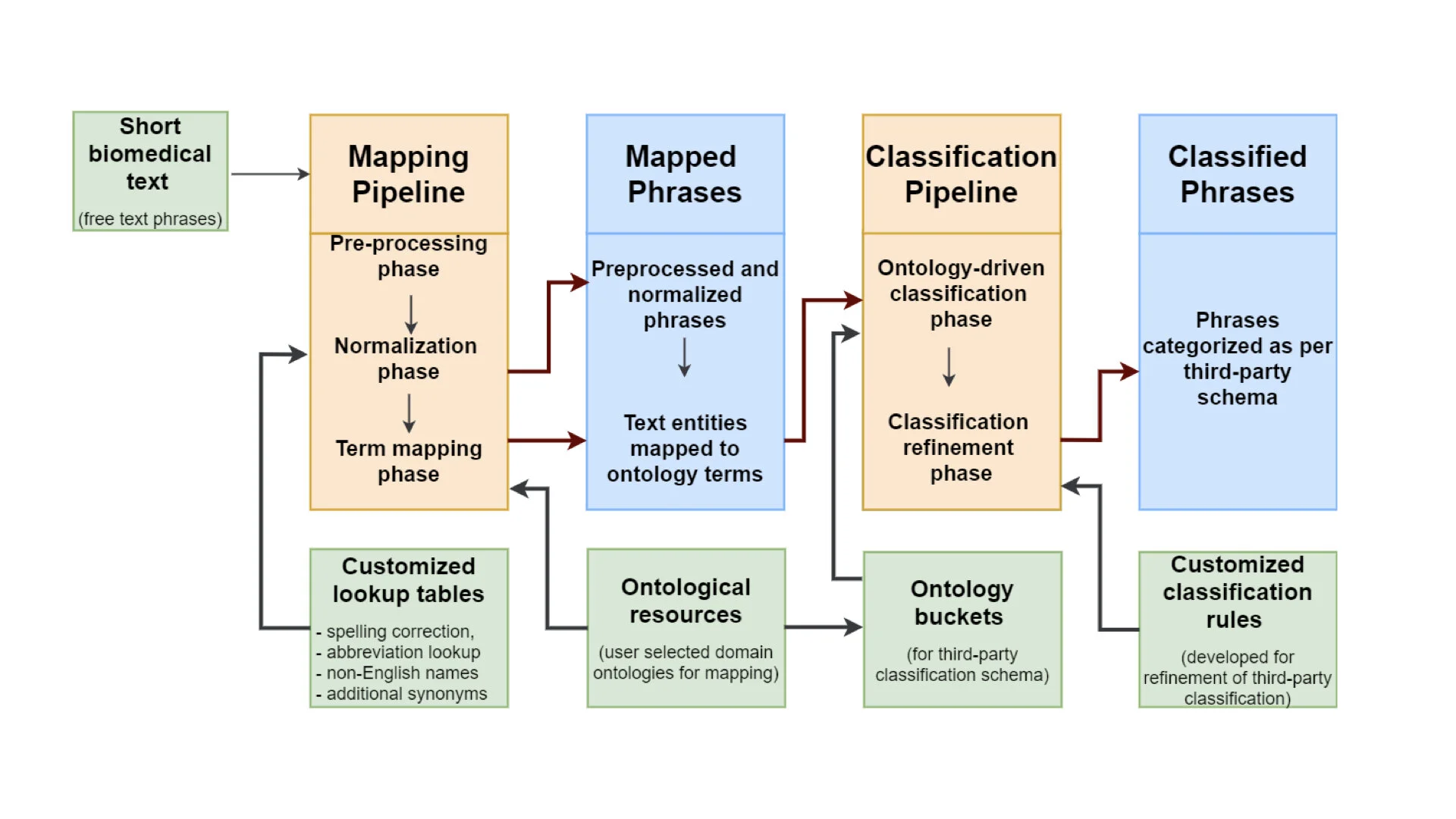

The initial focus of LexMapr development has been on providing a text-mining tool that cleans up short free-text biosample metadata containing inconsistent punctuation, abbreviations and typos, and maps the identified entities to standard terms from ontologies. This blog is the second in a series on text-mining in CINECA; the previous instalment is "Uncovering metadata from semi-structured cohort data".

Harmonisation of attributes across different cohorts is very challenging and labour-intensive, but critical for leveraging the collective potential of the data. The CINECA text-mining group aims to provide common tools and methods to extract additional metadata from structured and semi-structured fields in cohorts’ data.

Direct industrial participation in CINECA comes from two SMEs (Small and Medium-sized Enterprises), The Hyve and Clinicageno, both companies with an interest in bioinformatics applied to research and clinical genomic problems. The key driver of their interest in a project like CINECA is the possibility of long-term, sustainable profits in the areas of data-driven science and medicine in which The Hyve and Clinicageno specialise.
